Ethical Dilemmas and the Human Cost of AI Progress

AI isn’t coming — it’s already here. From patient diagnostics to workforce planning, algorithms now play a central role in how critical decisions get made. In healthcare staffing, systems powered by machine learning are screening applicants, assessing qualifications, and automating scheduling faster than any human team could. But scale brings risk. As AI systems make high-impact choices, the consequences of flawed data, biased models, or opaque decision-making grow harder to ignore. These aren’t abstract concerns. They affect who gets hired, who gets care, and who takes the blame when something goes wrong.

At the heart of it all is a growing dilemma: how to embrace the efficiency of AI without losing sight of fairness, transparency, and accountability. As industries move forward with automation, the question isn’t whether to use AI — it’s how to do it responsibly, without letting human cost become collateral damage.

AI Bias in Hiring Algorithms

AI is often seen as a neutral solution to human bias. But in hiring, especially in healthcare, that assumption can backfire fast. Algorithms trained on past data inherit the same preferences, gaps, and exclusions that shaped those decisions. What looks like automation can quietly replicate discrimination — at scale.

The impact on healthcare staffing is significant:

- Qualified professionals may be screened out before human review

- Staffing pipelines become less diverse, risking compliance and equity

- Systems may favor predictability over potential, limiting innovation in care delivery

Left unchecked, AI bias doesn’t just shape hiring — it shapes who gets to participate in the future of care.
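One common way to check a screening tool for this kind of skew is the "four-fifths rule" from the EEOC's Uniform Guidelines: if one group's selection rate falls below 80% of the highest group's rate, that is generally treated as a sign of possible adverse impact. The sketch below is a minimal illustration of that check, not a description of any particular vendor's audit. The function names, the `(group, advanced)` record format, and the sample numbers are all assumptions made for the example.

```python
from collections import Counter

def selection_rates(records):
    """Compute the share of candidates advanced per group.

    `records` is an iterable of (group, advanced) pairs, a hypothetical
    format chosen for this example.
    """
    totals, advanced = Counter(), Counter()
    for group, passed in records:
        totals[group] += 1
        if passed:
            advanced[group] += 1
    return {g: advanced[g] / totals[g] for g in totals}

def adverse_impact_ratios(records):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(records)
    best = max(rates.values())
    if best == 0:
        # Nobody was advanced, so the ratio is undefined for every group.
        return {g: None for g in rates}
    return {g: rate / best for g, rate in rates.items()}

def flag_groups(records, threshold=0.8):
    """Return groups whose ratio falls below the four-fifths threshold."""
    ratios = adverse_impact_ratios(records)
    return sorted(g for g, r in ratios.items() if r is not None and r < threshold)

# Illustrative, made-up screening outcomes for two groups.
sample = (
    [("group_a", True)] * 50 + [("group_a", False)] * 50
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)
flagged = flag_groups(sample)
```

A flag from a check like this is a prompt for human investigation, not proof of discrimination; small sample sizes in particular can make the ratio unreliable.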

Machine Decisions in Healthcare: Who’s Liable?

As AI systems take on more responsibility in healthcare operations, the question of liability is becoming harder to avoid. From screening clinicians to matching them with shifts and facilities, automation is influencing outcomes that directly impact care quality and legal compliance. But when the system makes the wrong call, who takes responsibility?

The answer is often unclear. Healthcare providers may assume the technology is vetted. Vendors point to the data or disclaim liability in their contracts. Meanwhile, bad decisions like credential mismatches, overlooked candidates, or flawed assignments can lead to compliance violations or patient risk. A 2024 Deloitte survey found that 68% of healthcare executives were unsure who holds legal accountability when AI systems drive workforce decisions.

Key risks include:

- Legal exposure from improper staffing or discriminatory automation

- Erosion of trust among staff and stakeholders

- Lack of recourse for candidates affected by flawed outcomes

In a high-stakes sector like healthcare, decision-making without clear accountability isn’t just risky; it’s dangerous.

The Economic Fallout of Automation

In a mid-sized hospital on the East Coast, the scheduling team once buzzed with activity — juggling phone calls, compliance checks, and last-minute cancellations. Today, most of that work is done in silence, handled by a workforce platform powered by AI. What once took hours now takes seconds. What once required five people now needs two.

Automation is transforming the structure of healthcare staffing. AI systems can process credentials, allocate shifts, and onboard clinicians with unmatched speed. But this efficiency comes at a cost. A 2024 McKinsey study projects that up to 22% of administrative roles in healthcare are at risk of automation by 2030. The real disruption isn’t just job loss — it’s the mismatch between how fast technology moves and how slowly organizations adapt. Without a plan to retrain and realign, staffing ecosystems can fracture.

Ignore the shift, and organizations lose capacity. Manage it strategically, and they gain competitive advantage.

The Legal Lag in AI Policy

The law hasn’t caught up — and AI isn’t waiting.

While healthcare staffing platforms accelerate automation, regulations remain rooted in outdated frameworks. The anti-discrimination laws enforced by the EEOC weren’t designed for black-box algorithms. HIPAA covers data privacy, but not decision logic. Meanwhile, AI systems continue to make hiring, scheduling, and credentialing calls with minimal legal oversight.

A 2024 IBM survey found that 61% of compliance leaders believe current laws are “inadequate” for AI-driven staffing decisions.

Most AI use in staffing today operates in a legal gray zone — not because it’s illegal, but because it’s unregulated.

No federal standard exists for algorithmic accountability in hiring. Enforcement is fragmented across jurisdictions. Risk is high, but responsibility is murky.

Every decision made by an unregulated system is a liability waiting to surface.

Solving for Bias, Building for Trust

Fixing AI bias starts with owning responsibility, not offloading it to the algorithm.

In healthcare staffing, trust is everything. That trust erodes when candidates are misjudged by opaque systems or excluded due to flawed data. The future isn’t about eliminating human involvement; it’s about using AI to support critical decisions, not replace the people who make them. According to Gartner, 74% of organizations using AI plan to implement human review checkpoints by 2026, a clear signal that human-in-the-loop (HITL) systems are becoming the standard.
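To make the human-in-the-loop idea concrete, here is a minimal sketch of a review checkpoint: the model may fast-track a candidate only when it is confident and favorable, and every rejection or uncertain case is routed to a person. This is an illustrative pattern under stated assumptions, not any specific platform's workflow. The `ScreeningResult` fields, the `route` function, and the 0.9 threshold are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ScreeningResult:
    candidate_id: str
    score: float            # assumed model confidence in [0, 1]
    recommend_reject: bool  # assumed model recommendation

def route(result, review_threshold=0.9):
    """Decide whether a screening result can proceed without review.

    The model is never allowed to reject on its own: any adverse
    recommendation, and any low-confidence score, goes to a human.
    """
    if result.recommend_reject:
        return "human_review"
    if result.score < review_threshold:
        return "human_review"
    return "auto_advance"

# Illustrative, made-up results.
decisions = [
    route(ScreeningResult("c1", 0.95, False)),
    route(ScreeningResult("c2", 0.95, True)),
    route(ScreeningResult("c3", 0.50, False)),
]
```

The design choice worth noting is the asymmetry: automation is trusted to speed up favorable outcomes, while outcomes that could harm a candidate always get a human decision-maker who can be held accountable.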

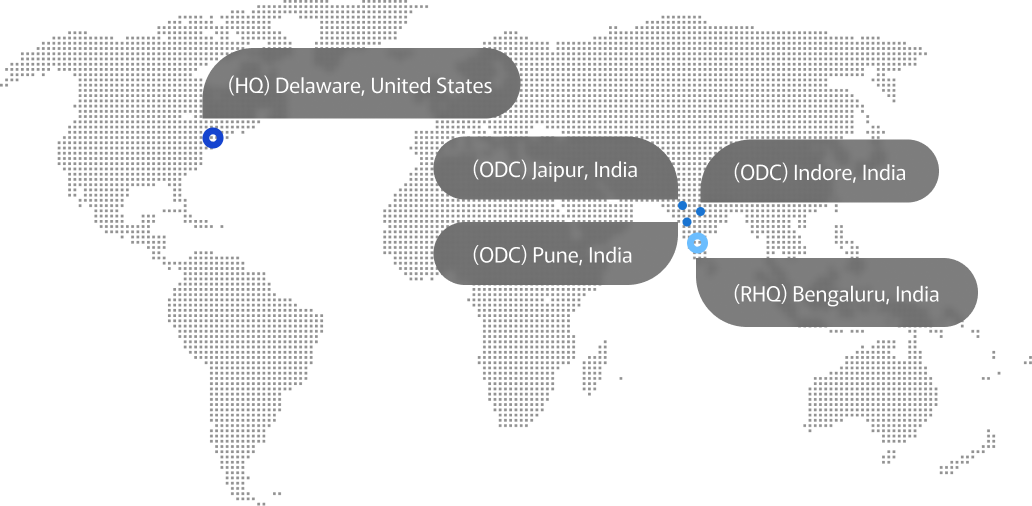

At Advayan, we don’t leave fairness to chance. We’ve built our model to ensure accuracy, accountability, and transparency at every stage of the staffing process.

How Advayan solves for ethical AI use:

- We combine algorithmic speed with human-led credential review

- We regularly audit tools and data pipelines to reduce systemic bias

- We build every placement on a foundation of equity, compliance, and clinical relevance

Because when the stakes are this high, trust isn’t a feature; it’s the service.

How Advayan Leads the Future of Ethical Staffing

At Advayan, we believe AI should empower people — not erase them. That’s why our healthcare staffing model is built around a core principle: technology must serve clinical integrity, compliance, and human judgment. While others race to automate, we focus on getting it right. Our systems are designed with built-in transparency, regular audits, and human-in-the-loop reviews — ensuring every staffing decision is both efficient and ethically sound.

We partner with healthcare organizations navigating AI disruption, helping them modernize operations without compromising on fairness or trust. From credentialing safeguards to equitable hiring workflows, our approach scales smart automation without cutting corners on accountability. In an industry where lives depend on the right people in the right roles, shortcuts aren’t an option.

Conclusion

AI isn’t neutral. Every automated decision has real-world consequences. As regulations struggle to catch up, the cost of getting it wrong grows higher: legally, ethically, and operationally. The future belongs to staffing partners who combine speed with responsibility. Advayan is that partner.