Across boardrooms and budget cycles, AI strategy is being reduced to a headcount problem. Hire more AI engineers. Pay the premium. Hope velocity follows. Yet many enterprises now sit with impressive AI teams—and underwhelming results. Models stall in pilots. Revenue teams don’t trust outputs. Compliance teams scramble after the fact. The issue isn’t talent quality; it’s organizational readiness. AI is not a role you fill. It’s a system you enable. When enablement lags, even elite engineers become isolated specialists, and AI turns into an expensive science project. The real tension isn’t hiring versus not hiring. It’s over-hiring specialists while under-training the organization that must actually use, govern, and monetize AI.

The AI Hiring Frenzy—and Why It’s Rational but Incomplete

Enterprises are responding logically to market signals. AI talent is scarce. Salaries are high. GenAI tooling is exploding. Competitors are announcing “AI-first” roadmaps. In that environment, hiring becomes the fastest visible action.

Common drivers behind the surge:

- Fear of being outpaced by AI-native competitors

- Pressure from boards and investors to “show AI progress”

- Tool vendors promising quick wins with the right technical hires

This explains the rush, but it doesn’t guarantee outcomes. AI engineers are necessary but not sufficient. When they enter organizations with no shared data standards, unclear decision rights, and untrained frontline teams, their work bottlenecks. Models get built, but adoption stalls. Value leaks quietly.

What’s rarely acknowledged is that AI productivity scales only when the surrounding system—processes, incentives, governance, and skills—can absorb it.

The Blind Spot: Under-Trained Teams as the Real AI Bottleneck

The least discussed risk in enterprise AI adoption isn’t model accuracy. It’s organizational friction.

Most AI initiatives fail downstream of engineering. Sales teams don’t know how to operationalize predictions. Marketing teams mistrust automated insights. Operations teams override recommendations “just in case.” Compliance teams are brought in too late.

This creates four hidden costs:

- Decision latency: AI outputs exist, but humans hesitate or misinterpret them

- Shadow processes: Teams recreate manual workflows alongside AI systems

- Revenue leakage: Predictive insights fail to translate into actions

- Compliance exposure: Models operate without consistent governance

In these environments, AI engineers are blamed for “lack of impact,” when the real issue is enablement debt. Without systematic training for non-AI roles—revenue leaders, operators, analysts, compliance stakeholders—AI becomes a narrow technical layer instead of a business multiplier.

This is where AI workforce strategy quietly diverges from AI hiring strategy. One builds capability. The other accumulates cost.

When Great AI Engineers Still Fail

Even top-tier AI talent struggles inside misaligned organizations. Not because they lack skill, but because they lack context.

Common failure patterns include:

- Engineers optimizing models without clarity on revenue or risk priorities

- Product teams unable to translate business questions into AI-ready problems

- Data scientists blocked by inconsistent data ownership and definitions

AI engineers do their best work when business logic is explicit. Without it, they over-engineer, mis-prioritize, or stall. The irony is sharp: enterprises hire rare talent, then place it inside environments that prevent leverage.

The result is frustration on both sides. Leaders see limited ROI. Engineers see limited impact. Attrition follows. Costs rise again.

Over-Hiring vs. Enablement-Led AI Strategy

| Dimension | Over-Hiring AI Engineers | Enablement-Led AI Strategy |
| --- | --- | --- |
| Primary investment | Specialized headcount | Organizational capability |
| Speed to visible action | Fast | Moderate but compounding |
| Adoption across teams | Low | High |
| Risk & compliance posture | Reactive | Designed-in |
| Long-term ROI | Uncertain | Durable and scalable |

Enablement-led strategies treat AI as an enterprise operating model, not a lab function. Training isn’t generic “AI literacy.” It’s role-specific, outcome-driven, and tied to how decisions are actually made.

This is where leading consultancies increasingly focus—not on adding more tools or people, but on orchestrating how AI fits into revenue systems, compliance frameworks, and performance management.

Tools Everywhere, Strategy Nowhere

The market is flooded with copilots, platforms, and automation layers. Most are powerful. Many are poorly integrated.

The failure mode is predictable: tools are deployed before workflows are redesigned. Automation amplifies confusion instead of clarity. “AI-first” becomes a slogan rather than an execution discipline.

Enterprises that avoid this trap align three layers before scaling tools:

- Decision ownership: who trusts and acts on AI outputs

- Data discipline: shared definitions tied to business metrics

- Enablement paths: training that maps AI insights to daily actions

This is less visible than hiring announcements, but far more decisive.

The Cost No One Budgets For: Enablement Debt

Every AI initiative carries an invisible liability: enablement debt. It accumulates when organizations deploy models faster than they build the skills, incentives, and governance to use them. Unlike technical debt, enablement debt doesn’t trigger alerts. It shows up as missed targets, slow decisions, and quiet resistance.

Symptoms surface across the enterprise:

- Revenue teams discount AI-driven forecasts because they don’t understand confidence levels

- Operations teams override recommendations to protect legacy KPIs

- Compliance teams retrofit controls after models are already live

Each workaround erodes trust. Over time, leaders conclude that “AI didn’t deliver,” when in reality the organization never learned how to operate with it.

Enterprises that outperform treat enablement as infrastructure. They design training pathways tied to roles and decisions. They define how AI changes accountability, not just productivity. They measure adoption as rigorously as model performance.
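One way to make "measuring adoption" concrete is to track how often teams act on the AI recommendations they are shown. The sketch below is only an illustration under stated assumptions: the `DecisionRecord` fields, the `adoption_by_team` helper, and the sample records are all hypothetical, not drawn from any real system or from the practices described above.

```python
from dataclasses import dataclass


@dataclass
class DecisionRecord:
    """One logged business decision (hypothetical schema)."""
    team: str
    ai_recommendation_shown: bool
    followed_recommendation: bool


def adoption_by_team(records):
    """Return {team: share of shown recommendations that were followed}."""
    shown = {}
    followed = {}
    for r in records:
        # Decisions made without an AI recommendation are out of scope.
        if not r.ai_recommendation_shown:
            continue
        shown[r.team] = shown.get(r.team, 0) + 1
        if r.followed_recommendation:
            followed[r.team] = followed.get(r.team, 0) + 1
    return {team: followed.get(team, 0) / n for team, n in shown.items()}


# Made-up sample data, for illustration only.
records = [
    DecisionRecord("sales", True, True),
    DecisionRecord("sales", True, False),
    DecisionRecord("operations", True, False),
    DecisionRecord("operations", True, False),
    DecisionRecord("operations", False, False),
]

rates = adoption_by_team(records)
for team, rate in rates.items():
    print(f"{team}: {rate:.0%} of AI recommendations followed")
```

A low rate for a given team does not by itself mean the model is wrong. It may point to the override and trust patterns listed earlier, which is why a metric like this is best read alongside model performance rather than instead of it.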

Tactical AI Spend vs. Strategic AI Investment

| Lens | Tactical AI Spend | Strategic AI Investment |
| --- | --- | --- |
| Objective | Quick capability | Sustained performance |
| Budget focus | Tools & talent | Systems & alignment |
| Success metric | Models deployed | Decisions improved |
| Time horizon | Quarterly | Multi-year |
| Failure mode | Fragmentation | Continuous learning |

Tactical spend feels decisive. Strategic investment feels slower. Only one compounds.

This distinction matters because AI value rarely arrives in a single leap. It emerges as feedback loops tighten—between data, models, people, and incentives. Without intentional design, those loops never close.

Why “AI-First” Narratives Collapse in Execution

“AI-first” has become shorthand for ambition, but ambition without structure is noise. Many enterprises declare AI-first while leaving core operating models unchanged. Decisions remain siloed. Data remains fragmented. Accountability remains human-only.

The result is predictable:

- AI insights compete with intuition instead of informing it

- Automation accelerates flawed processes

- Leaders struggle to separate signal from novelty

AI-first should not mean tool-first. It should mean decision-first. Which decisions matter most? Where does uncertainty cost the business real money? Which teams must change behavior for AI to matter?

Until those questions are operationally answered, no volume of tooling or hiring will compensate.

Reframing AI Workforce Strategy for Enterprise Scale

The most effective AI workforce strategies invert the usual sequence.

Instead of:

Hire → Deploy → Hope for adoption

They follow:

Clarify decisions → Enable roles → Scale capability

This reframing changes everything. Hiring becomes targeted. Training becomes contextual. Tools become accelerants, not crutches. AI engineers operate with clear business intent. Non-AI teams gain confidence and fluency.

Critically, compliance and governance stop being blockers and become design inputs. That shift alone prevents costly rewrites and reputational risk.

This is where enterprises begin to see AI not as a cost center or innovation theater, but as a performance system.

The Quiet Advantage of Orchestration

As AI initiatives scale, complexity rises nonlinearly. Data intersects with regulation. Revenue optimization intersects with customer trust. Speed intersects with risk.

Managing these tensions requires orchestration—not heroics. It requires aligning technology, people, and incentives around measurable outcomes. Enterprises that master this don’t necessarily hire the most AI engineers. They enable the organization to work intelligently with the ones they have.

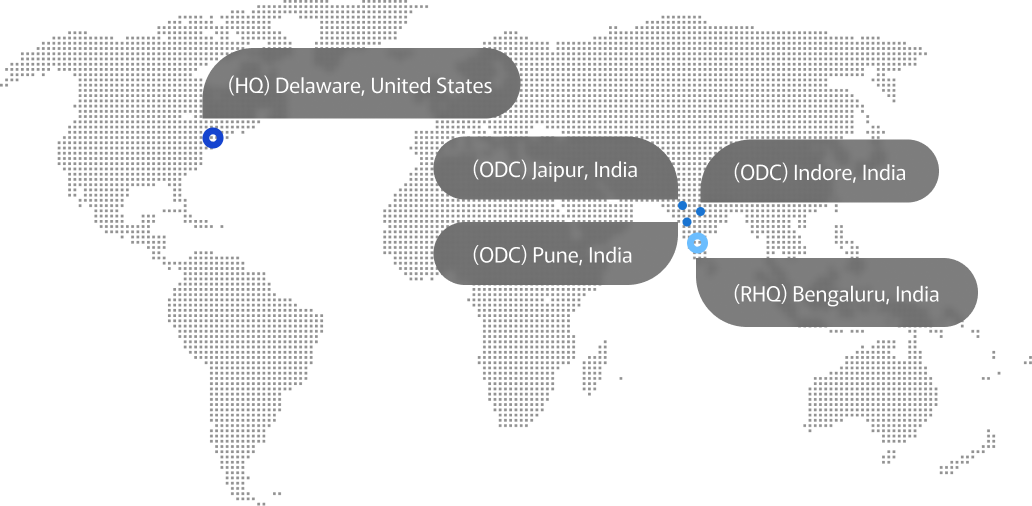

Advayan’s work across modern revenue systems, AI performance optimization, and compliance-driven growth reflects this reality. The most durable advantage isn’t experimentation. It’s execution discipline at scale.

Conclusion

Enterprises aren’t failing at AI because they lack talent. They’re failing because they mistake hiring for strategy. AI delivers value only when organizations are trained, aligned, and designed to act on it. Over-hiring without enablement inflates cost and disappointment. Enablement-led AI workforce strategy builds fluency, trust, and compounding returns. In a market crowded with tools and titles, strategic clarity remains the rarest asset—and the one that separates AI ambition from enterprise impact.